Start with what hurts most. Most people are looking for a checklist on how to implement DevOps, but it’s rarely that simple. The problem is that every group is at a different stage of its DevOps transformation, so where each journey “starts” will be different for every organization.

However, DevOps is also a culture that builds on itself: each layer rests on the foundation of what came before. In that sense, there are building blocks you need to have in place before you can add the next layer. I often refer to this as the “LEGO” approach. You have a bunch of individual building blocks, and in some organizations certain areas are more mature, with several blocks already assembled into basic structures and processes. Your job is to form a strategy that puts them all together into one cohesive DevOps structure.

Here are some of the basic building blocks that I think every organization needs in order to build a DevOps culture.

Metrics: We have to be able to measure where we are if we want to figure out where we need to go. Metrics tell us where we stand, what’s important to the organization, and how each step in the DevOps journey improves our process and adds value for our end users. You need to be able to measure and report on them to reinforce positive behaviors. Find a couple of basic measurements and put them on a dashboard where the entire team can see them, so everyone stays focused on what we’re trying to improve.
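
To make “a couple of basic measurements” concrete, here is a minimal Python sketch that turns build history into two dashboard numbers: build success rate and average build duration. The `BuildRecord` type and its fields are hypothetical; in practice this data would come from whatever CI or tracking system you already run.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class BuildRecord:
    # Hypothetical record shape; real CI systems expose their own fields.
    build_id: int
    succeeded: bool
    duration_minutes: float


def dashboard_metrics(builds: list[BuildRecord]) -> dict[str, float]:
    """Summarize a few basic measurements suitable for a team dashboard."""
    if not builds:
        return {"build_count": 0, "success_rate": 0.0, "avg_duration_minutes": 0.0}
    successes = sum(1 for b in builds if b.succeeded)
    return {
        "build_count": len(builds),
        "success_rate": successes / len(builds),
        "avg_duration_minutes": mean(b.duration_minutes for b in builds),
    }


builds = [
    BuildRecord(1, True, 10.0),
    BuildRecord(2, False, 12.0),
    BuildRecord(3, True, 8.0),
    BuildRecord(4, True, 10.0),
]
print(dashboard_metrics(builds))
```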

Source Control: This is probably one of the most fundamental building blocks a company must have in place before building out any other part of its DevOps journey. Teams need their code in a place where it can be accessed and reviewed, and where they can see what changes have been made. This is fundamental to protecting their code, performing check-ins and peer reviews, and understanding exactly what they are pushing through the delivery pipeline.

This is also where we start to build out some of our other metrics. Things like code churn and active files become easy to identify. Even some of the more ambiguous metrics, like lines of code, are at least available to help us get our arms around just how big a project is or how much technical debt might be lurking under the covers.
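
As a rough illustration of how cheaply churn falls out of source control, the sketch below totals lines added plus lines deleted per file from the output of `git log --numstat --format=` (optionally narrowed with something like `--since="30 days ago"`). Defining churn as added + deleted lines is one common convention, not the only one, and the function name is my own.

```python
from collections import Counter


def churn_by_file(numstat_output: str) -> Counter:
    r"""Sum lines added + deleted per file from `git log --numstat` output.

    Each data line looks like "<added>\t<deleted>\t<path>". Binary files
    report "-" for both counts and are skipped. Renamed paths are kept
    exactly as Git prints them (no normalization).
    """
    churn: Counter = Counter()
    for line in numstat_output.splitlines():
        parts = line.split("\t")
        if len(parts) != 3:
            continue  # blank separator lines between commits, etc.
        added, deleted, path = parts
        if added == "-" or deleted == "-":
            continue  # binary file: Git reports no line counts
        churn[path] += int(added) + int(deleted)
    return churn


# Sample output in the shape produced by: git log --numstat --format=
sample = "10\t2\tsrc/app.py\n\n5\t5\tsrc/app.py\n1\t0\tREADME.md\n-\t-\tlogo.png\n"
churn = churn_by_file(sample)
print(churn.most_common())  # files ranked by churn
print(len(churn))           # number of "active" files with text changes
```

Files with the highest churn are often where reviews and tests deserve the most attention, and the count of distinct files gives a simple “active files” number.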

Work Item Tracking: Use some form of work item tracking for Features, Backlog Items, Bugs, and Tasks. I’m a big fan of Agile, so having a Product Backlog or Kanban Board visible to the entire group allows everyone to focus on a shared goal. Tracking Work Items clearly lays out who is responsible for what and adds a level of accountability. Each iteration should have a clear definition of what “done” means for the team.
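
One way to make ownership and “done” explicit is to encode them in the work item itself. The Python sketch below is a hypothetical model, not the schema of any particular tracker: each item has a single owner, and it only counts as done when every criterion in a team-agreed Definition of Done (the three criteria shown are placeholders) has been met.

```python
from dataclasses import dataclass, field
from enum import Enum


class ItemType(Enum):
    FEATURE = "Feature"
    BACKLOG_ITEM = "Backlog Item"
    BUG = "Bug"
    TASK = "Task"


# Hypothetical Definition of Done; each team should agree on its own list.
DEFINITION_OF_DONE = ("code_reviewed", "unit_tests_pass", "deployed_to_test")


@dataclass
class WorkItem:
    title: str
    item_type: ItemType
    assignee: str  # a single named owner makes responsibility explicit
    completed_criteria: set[str] = field(default_factory=set)

    def missing_criteria(self) -> list[str]:
        """Criteria from the Definition of Done not yet satisfied."""
        return [c for c in DEFINITION_OF_DONE if c not in self.completed_criteria]

    def is_done(self) -> bool:
        """Done only when every agreed criterion has been met."""
        return not self.missing_criteria()


story = WorkItem("Login page", ItemType.BACKLOG_ITEM, "alice", {"code_reviewed"})
print(story.is_done(), story.missing_criteria())
```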

Continuous Integration: Once your code is centralized in source control, you have a location from which to build a Continuous Integration (CI) system. The CI system retrieves the source code and performs Automated Builds, Unit Tests, and Static Code Analysis (SCA), all of which also contribute useful metrics on how well you’re doing. Encourage developers to check in their code early and often so that the team gets constant feedback every time the project is built, tested, and packaged into build artifacts on a separate build machine (avoiding the “It Works On My Machine” syndrome).
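
Real CI servers each have their own pipeline format, so rather than guess at one, here is a tool-agnostic Python sketch of the core loop a CI job runs on the build machine: execute each step in order and stop at the first failure so the team gets fast feedback. The commands in `STEPS` are hypothetical examples for a Python project (they assume `build`, `pytest`, and `flake8` are installed); substitute your own build, test, and analysis tools.

```python
import subprocess
import sys
from collections.abc import Sequence


def run_pipeline(steps: Sequence[tuple[str, list[str]]]) -> tuple[bool, list[str]]:
    """Run CI steps in order, stopping at the first failing step.

    Each step is (name, command). Returns (succeeded, names of steps that ran).
    """
    ran: list[str] = []
    for name, command in steps:
        ran.append(name)
        # Output is inherited, so it lands in the CI job's build log.
        result = subprocess.run(command)
        if result.returncode != 0:
            print(f"FAILED at step '{name}' (exit code {result.returncode})", file=sys.stderr)
            return False, ran
    print("All steps passed; the build artifacts can be published.", file=sys.stderr)
    return True, ran


# Hypothetical steps for a Python project; swap in your own tools.
STEPS = [
    ("build", [sys.executable, "-m", "build"]),
    ("unit tests", [sys.executable, "-m", "pytest"]),
    ("static analysis", [sys.executable, "-m", "flake8"]),
]
```

On the build machine, `run_pipeline(STEPS)` would be called on every check-in. Stopping at the first failure keeps feedback fast, at the cost of not learning whether later steps would also have failed.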

When building your CI blocks, this is a great place to gather additional metrics. You can see whether builds are consistently green and all your Unit Tests are passing, and tools like SonarQube or Fortify produce additional metrics around code quality, coding standards, and technical debt. Through reporting dashboards, team members can see the quality of the code for themselves and gain confidence in their builds.
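
Tools like SonarQube let you configure quality gates on the server itself; the Python sketch below does not use any tool’s API. It only illustrates the idea of turning build metrics into a single green/red decision with a list of reasons. The `BuildReport` fields and the 80% coverage threshold are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class BuildReport:
    # Hypothetical summary of one CI build; field names are illustrative only.
    tests_passed: int
    tests_failed: int
    coverage_percent: float
    new_critical_issues: int  # e.g. new findings reported by static analysis


def quality_gate(report: BuildReport, min_coverage: float = 80.0) -> list[str]:
    """Return the reasons a build fails the gate; an empty list means green."""
    reasons: list[str] = []
    if report.tests_passed + report.tests_failed == 0:
        reasons.append("no unit tests ran")
    if report.tests_failed > 0:
        reasons.append(f"{report.tests_failed} unit test(s) failing")
    if report.coverage_percent < min_coverage:
        reasons.append(f"coverage {report.coverage_percent:.1f}% < {min_coverage:.1f}%")
    if report.new_critical_issues > 0:
        reasons.append(f"{report.new_critical_issues} new critical issue(s)")
    return reasons


print(quality_gate(BuildReport(120, 0, 85.0, 0)))
print(quality_gate(BuildReport(118, 2, 72.5, 1)))
```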

Automated Deployment (Single Environment FIRST): This is where we start seeing a lot of synergy, but also a lot of issues with building trust in the process. Too often an over-eager engineer will try to automate the entire pipeline in one shot. Instead, start small: automate the deployment to just a single environment. Avoid trying to “Boil the Ocean” and work on ironing out the kinks in that one deployment. Build confidence in the ability to deploy the code with no manual steps involved. Do this in a “throw-away” environment where you can deploy and destroy over and over again. Over time, your colleagues will see the advantages of having an automated deployment and will invite you in to enable it for the other environments in your pipeline. But dial in your automated deployment in that single environment first, bullet-proof it, and show the rest of the team that this is a process they can trust.
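
The deploy-and-destroy rehearsal described above can be sketched as a small loop. In this hypothetical Python example, `deploy`, `smoke_check`, and `destroy` are placeholders for your own scripts against the throw-away environment; the function counts how many consecutive rounds succeed, and it always tears the environment down so each round starts clean.

```python
from collections.abc import Callable


def rehearse_deployment(
    deploy: Callable[[], None],
    smoke_check: Callable[[], bool],
    destroy: Callable[[], None],
    rounds: int = 10,
) -> int:
    """Repeatedly deploy, verify, and destroy a throw-away environment.

    Returns the number of consecutive successful rounds before the first
    failure (equal to `rounds` if every round succeeded).
    """
    for i in range(rounds):
        try:
            deploy()
            if not smoke_check():
                print(f"round {i + 1}: smoke check failed")
                return i
        except Exception as exc:
            print(f"round {i + 1}: deployment error: {exc}")
            return i
        finally:
            destroy()  # always clean up so the next round starts fresh
    return rounds
```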

Automated Testing: Once we have a fully automated and repeatable process for deploying the system to a test environment, work on automating the testing of that system with integration and coded UI tests. The CI build runs unit tests, but a fully functional system gives us the opportunity to run integration and load tests. Being able to automatically reconstitute an entire environment and run more advanced tests is critical to increasing the team’s velocity and to reducing downtime caused by simple mistakes that basic functional testing would catch. However, don’t put all your trust in automated testing. These tests are great for scripting positive test cases, like logging in or querying for known information, but not every test can be anticipated, and there’s nothing like having a human at the helm doing exploratory testing.

The great thing about this is that it also becomes an iterative process: each time you build a test, it can be scripted, and your suite grows so that each iteration is more comprehensive than the last. I also want to point out that while Automated Testing is great for basic scenarios, trying to maintain complex scenarios through scripts can sometimes be more trouble than it’s worth. One of the worst things you can have is Automated Tests that continually fail and get ignored because they’re chalked up to “Oh, that always fails when the interface changes.” At that point you’re training your team to ignore certain tests. Every scripted test should pass every single time before the build is certified.
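
The rule that every scripted test must pass every time before certification can be made mechanical. This Python sketch assumes you have recorded each test’s outcome across several runs (the input shape is hypothetical). It certifies the build only when every test passed on every run, and it reports flaky tests separately so they get fixed or removed rather than quietly ignored.

```python
def certify_build(results: dict[str, list[bool]]) -> tuple[bool, list[str], list[str]]:
    """Decide whether a build can be certified from scripted test results.

    `results` maps each test name to its pass/fail outcome on every run.
    Returns (certified, consistently failing tests, flaky tests).
    """
    # A test with no recorded runs counts as failing: any([]) is False.
    failing = sorted(name for name, runs in results.items() if not any(runs))
    # Mixed outcomes mean the test is flaky and cannot be trusted either.
    flaky = sorted(name for name, runs in results.items() if any(runs) and not all(runs))
    certified = not failing and not flaky
    return certified, failing, flaky


results = {
    "login": [True, True, True],
    "search": [True, False, True],
    "checkout": [False, False, False],
}
print(certify_build(results))
```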

Infrastructure As Code: Once we can successfully deploy the code to a known set of servers or environments, the next step is to introduce Infrastructure as Code (IaC) into the pipeline. With both your code and your infrastructure configuration stored in source control, your team can stand up new environments simply by changing some parameters in source control. Parameterized scripts built with tools like Chef or Puppet eliminate the manual work of logging onto VMware portals to provision servers or configuring features such as web servers before you can deploy to an environment, further reducing the chance of human error.
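
Chef and Puppet each use their own languages, so the following is not either tool’s syntax. It is a minimal Python sketch of the underlying idea: a base definition lives in source control, and a new environment is described by a couple of parameters (environment name and server counts per role). The roles, sizes, and package names are hypothetical, and handing the resulting specs to an actual provisioning tool is out of scope here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServerSpec:
    name: str
    role: str
    cpus: int
    memory_gb: int
    packages: tuple[str, ...]


# Hypothetical base definitions, versioned in source control with the app code.
ROLE_DEFAULTS = {
    "web": {"cpus": 2, "memory_gb": 4, "packages": ("nginx",)},
    "db": {"cpus": 4, "memory_gb": 16, "packages": ("postgresql",)},
}


def render_environment(env_name: str, counts: dict[str, int]) -> list[ServerSpec]:
    """Expand a few parameters into a full list of server specs.

    Raises KeyError for a role that has no entry in ROLE_DEFAULTS.
    """
    specs: list[ServerSpec] = []
    for role, count in counts.items():
        defaults = ROLE_DEFAULTS[role]
        for i in range(1, count + 1):
            specs.append(ServerSpec(name=f"{env_name}-{role}-{i:02d}", role=role, **defaults))
    return specs


# Standing up a new "qa" environment is just a change of parameters.
for spec in render_environment("qa", {"web": 2, "db": 1}):
    print(spec)
```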

Monitoring: This is one of those areas that often gets overlooked. Once the iteration is over and the latest code is pushed to production, we often forget that operating the system is just as important as building it. Yet this is one of the most critical pieces for the business. We must monitor our code and our systems as they run in production to ensure we are delivering value to our end users. This shouldn’t be limited to the usual performance and exception monitoring, either. Monitoring should help answer the questions that matter most to the business: how end users actually use the application, which features they use most and might benefit from being expanded, and which features go unused and could be trimmed from the system in a future iteration.

Someone once explained to me that feature curation is like running a restaurant. As you operate the restaurant, you expand the menu and enhance certain dishes, but if nobody orders certain items, you take them off the menu. No restaurant would try to keep 400 items on the menu, doing all the shopping and everything else that goes with them, when nobody ever orders them. So why would you maintain 400 features in your system that nobody ever uses?
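
To answer the “which features are actually used?” question, usage telemetry can be reduced to a ranked list plus a list of features nobody touched. The Python sketch below assumes a hypothetical event stream in which each event is simply the name of the feature used; real telemetry pipelines carry far more context.

```python
from collections import Counter
from collections.abc import Iterable


def feature_usage_report(
    events: Iterable[str], all_features: set[str]
) -> tuple[list[tuple[str, int]], list[str]]:
    """Rank features by usage and list the ones nobody used.

    Events naming unknown features are ignored. Returns
    (features sorted by use count, unused features sorted by name).
    """
    counts = Counter(e for e in events if e in all_features)
    ranked = counts.most_common()  # most-used first
    unused = sorted(all_features - set(counts))
    return ranked, unused


events = ["search", "export", "search", "login", "search", "login"]
ranked, unused = feature_usage_report(events, {"search", "export", "login", "legacy_report"})
print(ranked)
print(unused)
```

Features in the `unused` list are the candidates for coming off the menu, though a short observation window can make seasonal features look unused.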

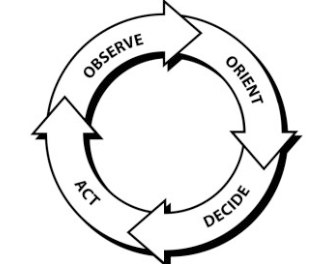

Continuous Improvement: Just because something isn’t broken doesn’t mean you can’t improve it. As you integrate different tools and processes into your delivery pipeline, think about how you can speed up the activities that take the longest or carry the most risk. Review your workflow constraints and look for ways to reduce cycle times. DevOps is not just about automating your pipeline. Sometimes you need to go back and rearchitect your existing system so that it can deploy efficiently without impacting your users. Many systems weren’t built to handle sophisticated deployment strategies like Blue-Green deployments, canary releases, rings, and other approaches that enable zero downtime and low-risk releases. But these are exactly the opportunities you’ll want to target as your DevOps journey matures.
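
Ring and canary releases depend on routing a stable subset of users to the new version. A common technique, shown here as a hedged Python sketch, hashes each user ID into a number in [0, 1) and compares it against cumulative ring sizes. The ring names and percentages in `RINGS` are hypothetical. SHA-256 is used instead of Python’s built-in `hash()` because string hashing in Python is randomized per process, which would reshuffle users between runs.

```python
import hashlib

# Hypothetical ring layout: (ring name, cumulative share of users reached).
RINGS = [("canary", 0.01), ("early_adopters", 0.10), ("broad", 1.00)]


def bucket(user_id: str) -> float:
    """Map a user ID to a stable number in [0, 1).

    Uses the first 48 bits of a SHA-256 digest; 48 bits fit exactly in a
    float, so the result is always strictly below 1.0.
    """
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:6], "big") / 2**48


def ring_for(user_id: str) -> str:
    """Return the first ring whose cumulative share covers this user."""
    b = bucket(user_id)
    for name, cumulative_share in RINGS:
        if b < cumulative_share:
            return name
    return RINGS[-1][0]  # defensive; unreachable while the last share is 1.0


def is_enabled(user_id: str, active_rings: int) -> bool:
    """True if the release has reached this user's ring.

    Rings are enabled in order, so active_rings=1 means canary only.
    """
    ring_names = [name for name, _ in RINGS]
    return ring_names.index(ring_for(user_id)) < active_rings


print(ring_for("alice"), is_enabled("alice", 1))
```

Widening the rollout is then a matter of increasing `active_rings`, and rolling back means lowering it again, while each user stays in the same ring.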

As many people like to say, “A journey of a thousand miles begins with the first step.” The DevOps journey is no different: it starts with a few small steps that move you down the maturity road. Not everyone starts in the same place, but it’s a road we all walk together. DevOps is about the journey, not the destination.